Ever wondered what makes AI agents like ChatGPT tick? These smart assistants can chat, solve problems, and even write code — but most people don’t know how AI agents work.

Think of AI agents as smart systems that combine three key abilities: they can understand their environment, think through problems, and learn from experience — just like humans do, but in their own unique way.

Want to really understand what these AI agents can (and can’t) do? In this guide, we’ll walk you through their key mechanisms, peek into their architecture, and show you how they learn and make decisions.

Ready to understand how AI agents work? Let’s dive in.

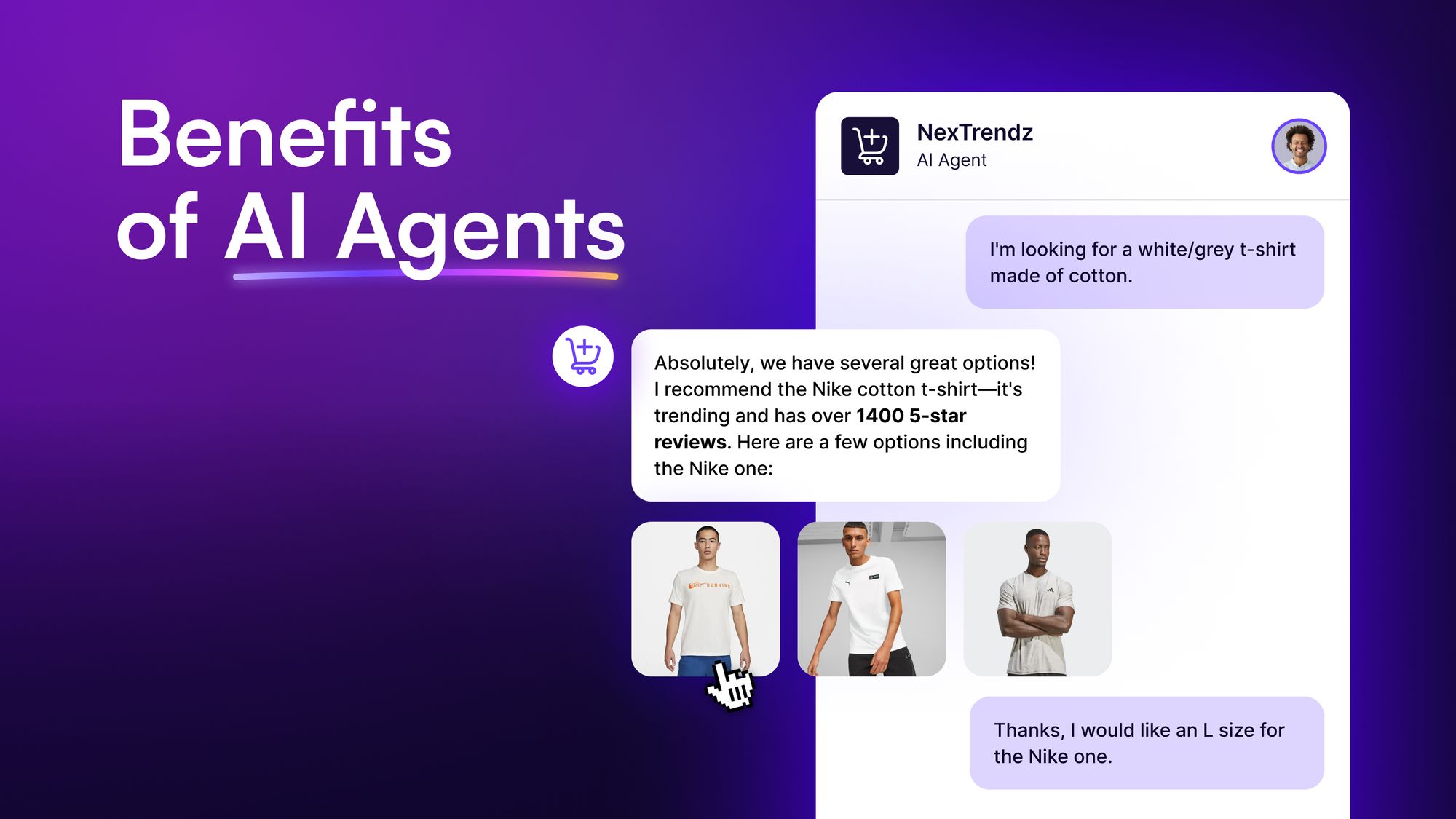

Read our complete guide on what is an AI agent to learn more about their benefits, use cases, and implementation best practices.

How do AI agents work: An overview

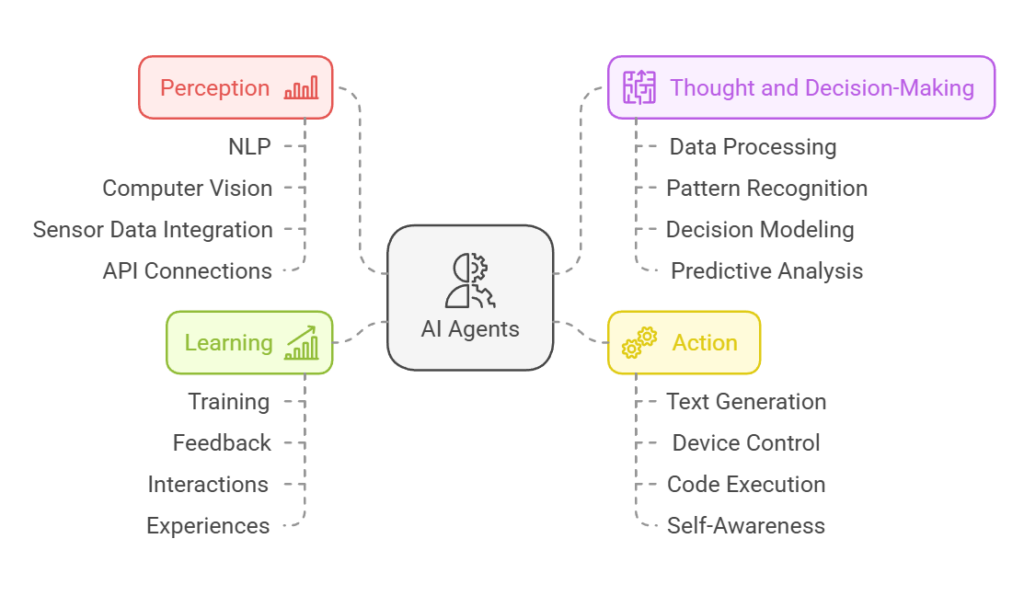

AI agents work in three simple steps: perceive, think, and act. When AI agents sense new data, either in their surroundings or through input, they first perceive it to gather information. Then, they process and analyze this information to make a decision. Finally, they execute the decision by taking the best course of action based on the perceived data.

Throughout this process, they also continuously learn from experience and remember lessons that they can use in the future.
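
To make the perceive, think, act loop concrete, here is a minimal sketch in Python. The `ThermostatAgent` class, its thresholds, and the input format are all made up for illustration; a real agent would put an LLM and real tools behind the same three steps.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent (hypothetical): keeps a room near a target temperature."""
    target: float = 21.0
    history: list = field(default_factory=list)  # remembered (reading, action) pairs

    def perceive(self, raw_input: str) -> float:
        # Perceive: turn raw input such as "18.5C" into a number.
        return float(raw_input.strip().rstrip("C"))

    def think(self, temperature: float) -> str:
        # Think: choose an action based on what was perceived.
        if temperature < self.target - 1:
            return "heat"
        if temperature > self.target + 1:
            return "cool"
        return "idle"

    def act(self, action: str) -> str:
        # Act: carry out the decision (here we only describe it).
        return f"thermostat -> {action}"

    def step(self, raw_input: str) -> str:
        temperature = self.perceive(raw_input)
        action = self.think(temperature)
        self.history.append((temperature, action))  # keep a record to learn from later
        return self.act(action)


agent = ThermostatAgent()
results = [agent.step(reading) for reading in ["18.5C", "21.2C", "24.0C"]]
print(results)
```

Each call to `step` runs one full cycle, and the `history` list stands in for the "remember lessons" part of the loop.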

In more than one way, the working process of AI agents is quite similar to how humans function. That’s because they are designed to think and act like humans — rationally and autonomously.

Let’s go into more detail about how AI agents work by discussing the steps:

Perception

AI agents start by gathering information from their environment through various inputs. Picture perception as the agent’s sensory system. Just like we use our eyes and ears, these agents have ways to gather and understand information from their world.

To do this, AI agents use different data perception methods, including:

- Natural Language Processing (NLP) for text and speech input

- Computer Vision for image and video analysis

- Sensor data integration for environmental information

- API connections for accessing external databases and services

Thought and decision-making

Once they’ve gathered information, AI agents start their thinking process. They look at the data and figure out what to do next — similar to how we weigh our options before making a choice.

The thought and decision-making phase involves multiple steps, like:

- Data processing: Utilizing advanced algorithms to interpret and analyze the collected information.

- Pattern recognition: Identifying trends and relationships within the data.

- Decision modeling: Evaluating potential actions and their outcomes.

- Predictive analysis: Forecasting future scenarios based on current and historical data.
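
As a rough illustration of decision modeling, the sketch below scores a few candidate actions by their expected payoff and picks the best one. The action names, probabilities, and payoffs are invented for the example; real agents usually rely on an LLM or a learned policy rather than a hand-written table.

```python
# Hypothetical actions, each with a list of (probability, payoff) outcomes.
actions = {
    "answer_directly": [(0.7, 10), (0.3, -20)],
    "search_then_answer": [(0.9, 8), (0.1, -5)],
    "ask_clarifying_question": [(1.0, 3)],
}


def expected_value(outcomes):
    """Average payoff of an action, weighted by how likely each outcome is."""
    return sum(probability * payoff for probability, payoff in outcomes)


scores = {name: expected_value(outcomes) for name, outcomes in actions.items()}
best_action = max(scores, key=scores.get)
print(best_action)
```

Here the riskier direct answer loses to searching first, because a wrong answer carries a large penalty.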

This process is powered by large language models (LLMs) such as GPT-4, Claude 3.5, and Gemini. These models serve as the “brain” of the AI agent, helping it understand the nuances of complex queries and generate contextual, human-sounding responses.

Action

After processing the information and deciding on the best course of action, AI agents execute it. This may involve generating text responses, controlling connected devices, executing code, or performing calculations.

However, there’s also a critical self-assessment stage when taking action. Well-designed AI agents can recognize when to ask for help or redirect a query to a human, for example when the available data isn’t enough to make a confident decision.
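
One simple way to picture this handoff is a confidence threshold. The sketch below is only an assumption about how it could work: the `route` function, the `confidence` score, and the 0.75 cutoff are hypothetical, and real systems estimate confidence in different ways.

```python
def route(answer: str, confidence: float, threshold: float = 0.75) -> dict:
    """Return the agent's answer, or hand off to a human when confidence is low."""
    if confidence >= threshold:
        return {"handled_by": "agent", "response": answer}
    # Below the (hypothetical) threshold: escalate instead of guessing.
    return {
        "handled_by": "human",
        "response": "Let me connect you with a teammate who can help.",
    }


confident = route("Your refund was issued yesterday.", confidence=0.92)
unsure = route("Your refund was probably issued.", confidence=0.40)
print(confident["handled_by"], unsure["handled_by"])
```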

Learning

One of the best parts of how AI agents work is that they learn continuously. Even though they are initially trained on data, new experiences and feedback are what make AI agents more useful in custom use cases.

Mainly, AI agents learn through training, feedback, interactions, and experiences. We’ll discuss this later in detail.

This learning ability lets them tackle harder tasks and handle new situations. The more they work, the better they get.

What makes these agents special is how they combine all four parts (sensing, thinking, doing, and learning) into one smooth operation. They process huge amounts of data, make smart choices, take action, and keep getting better at it.

While these steps broadly describe how AI agents work, their actual workflow is more complex and is made up of their core architecture, reasoning paradigms, and learning mechanisms.

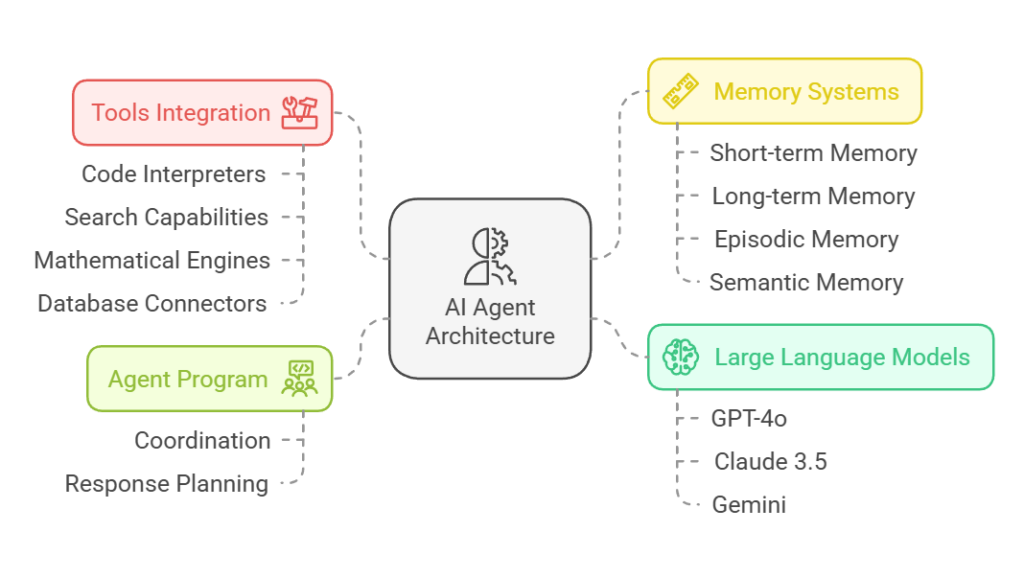

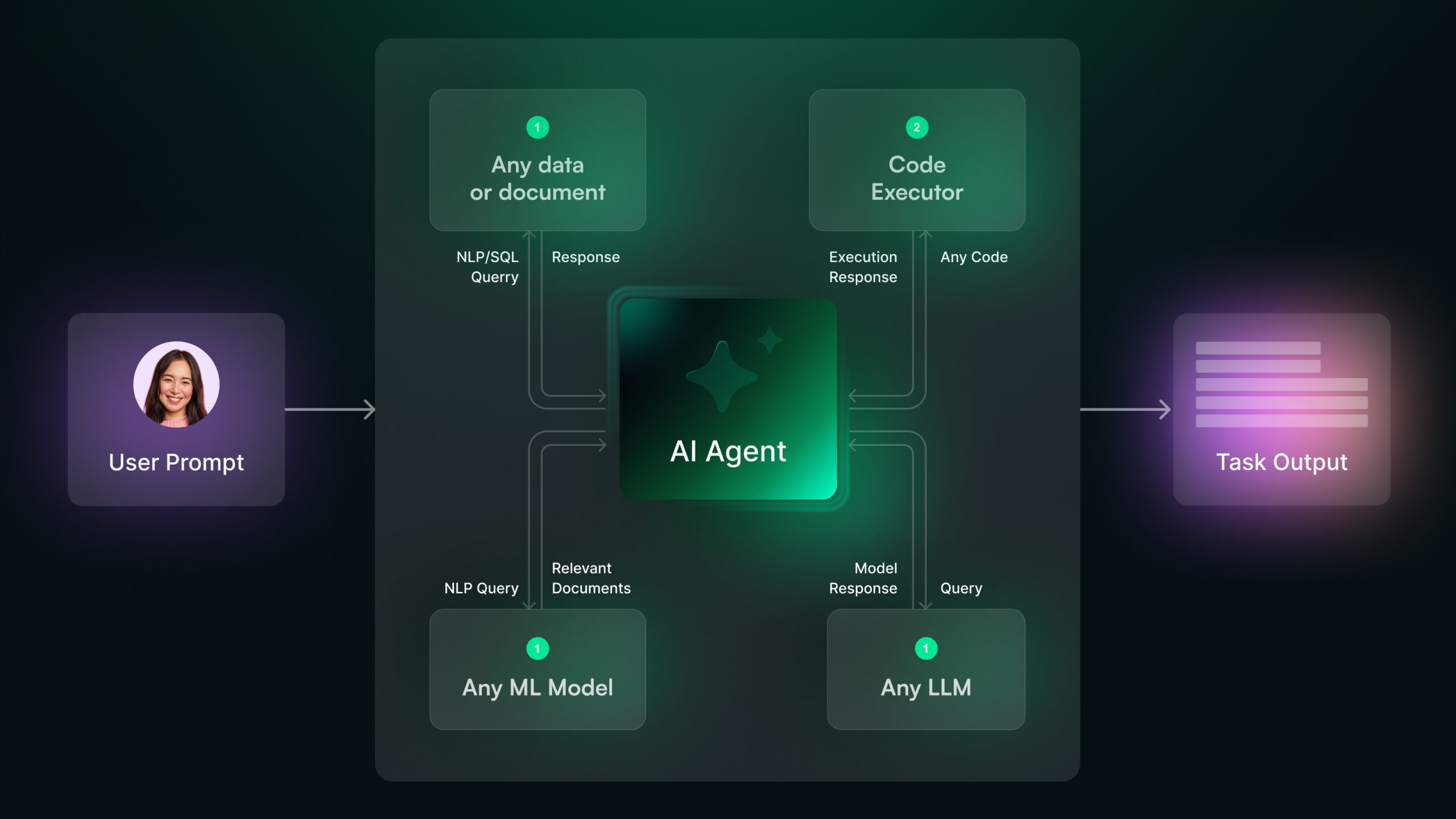

Key Components of AI Agent Architecture

We now have a good grasp of AI agents’ simple workflow. Let’s take a closer look at their architectural components and see how these sophisticated systems process information and make decisions.

Large Language Models (LLMs)

Modern AI agents have a Large Language Model (LLM) at their core that acts as the system’s “brain.” These models help them understand the nuances of language, allowing them to process queries and give answers in plain, simple, human language.

GPT-4o, Claude 3.5, and Gemini are some popular LLMs. Some AI companies also use proprietary models to build their agents.

Recent developments in LLMs have made AI agents more sophisticated and powerful. Now, AI agents can generate outputs that are quite human-like and accurate.

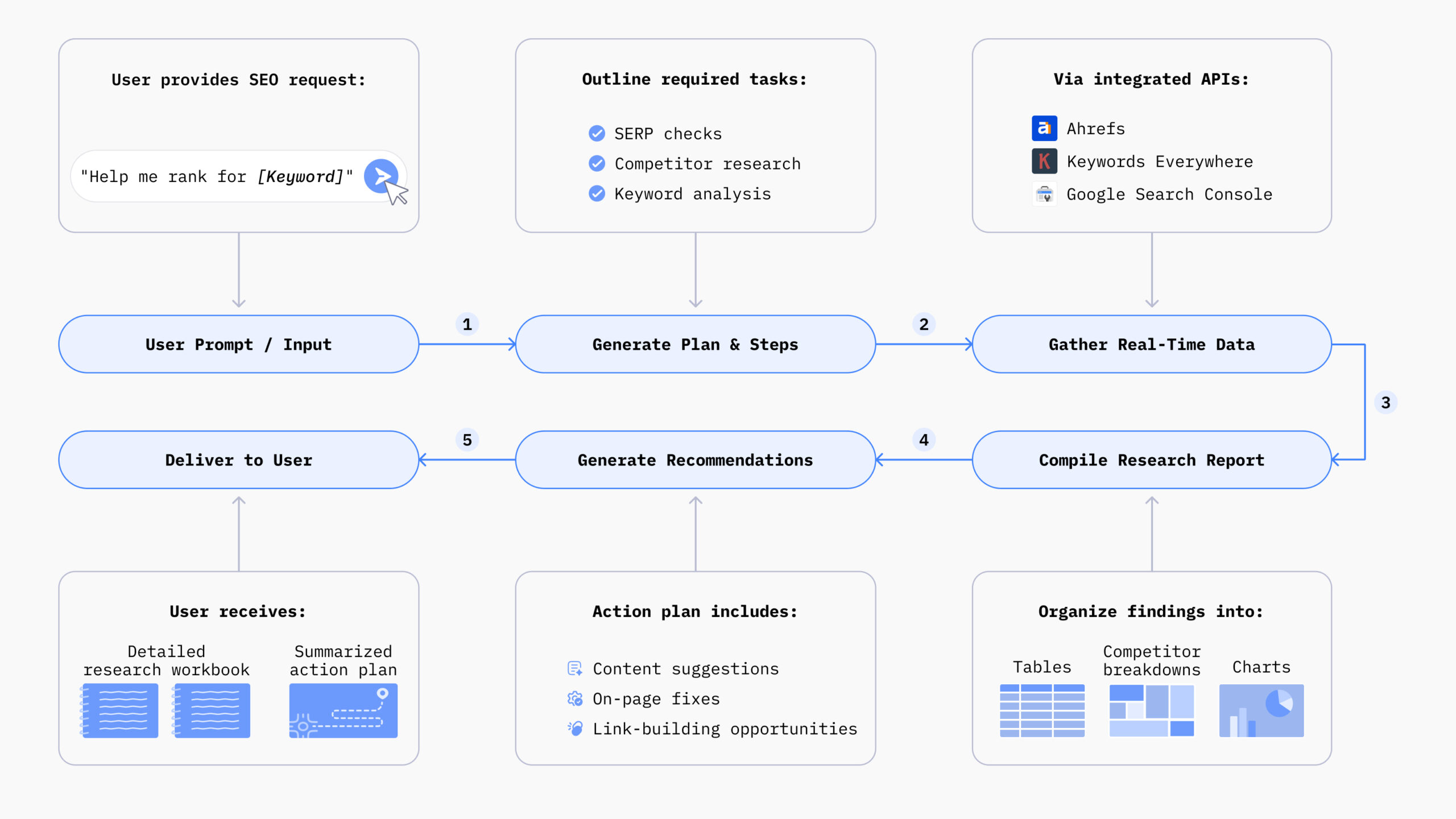

Tools Integration

AI agents do more than generate text. They connect with external tools that work like the agent’s hands to interact with databases, APIs, and other software systems. These tools include:

- Code interpreters to execute programming tasks

- Search capabilities to access current information

- Mathematical engines to handle complex calculations

- Database connectors to store and retrieve data
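
To show how tools can plug into an agent, here is a minimal sketch of a tool registry. Everything in it is hypothetical: the `tool` decorator, the `sqrt` and `order_status` tools, and the in-memory dictionary standing in for a database. Real agents typically describe tools to the LLM and let it choose which one to call.

```python
import math
from typing import Callable, Dict

TOOLS: Dict[str, Callable[[str], str]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("sqrt")
def sqrt_tool(arg: str) -> str:
    # Stand-in for a mathematical engine.
    return str(math.sqrt(float(arg)))


_ORDERS = {"order-1001": "shipped"}  # stand-in for a real database


@tool("order_status")
def order_status(arg: str) -> str:
    # Stand-in for a database connector.
    return _ORDERS.get(arg, "unknown order")


def call_tool(name: str, arg: str) -> str:
    """Run a registered tool, or report that it does not exist."""
    if name not in TOOLS:
        return f"error: no tool named {name!r}"
    return TOOLS[name](arg)


print(call_tool("sqrt", "144"))
print(call_tool("order_status", "order-1001"))
```

Keeping tools behind one `call_tool` entry point means new capabilities can be added without changing the rest of the agent.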

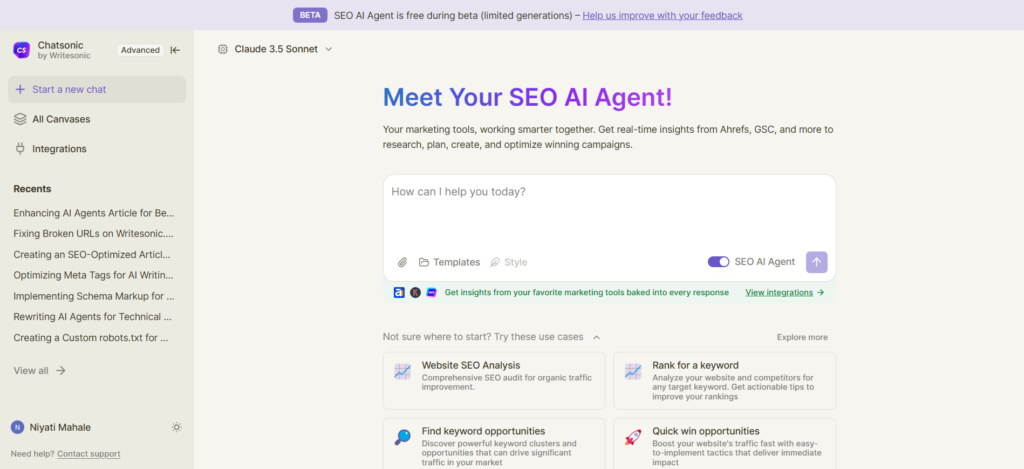

Take Chatsonic for example. The AI marketing agent has SEO, content writing, publishing, and other connected tools that make it a one-stop solution for all marketing requirements.

Read our guide on AI marketing agents to know more about how you can use them for your marketing strategy.

Memory Systems

AI agents have their own memory which helps them understand the context of conversations. If you’ve ever found ChatGPT remembering details from past conversations, that’s due to its memory system.

In general, AI agents use four types of memory systems:

- Short-term Memory: Keeps track of ongoing conversations and current task progress.

- Long-term Memory: Holds complete information about past interactions and learned experiences. This helps create more tailored and context-aware responses.

- Episodic Memory: Remembers only certain conversations and details that are necessary for future conversations.

- Semantic Memory: Holds long-term general knowledge that isn’t affected by specific events or experiences.
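
The four memory types can be pictured as four separate stores. The sketch below is a simplified assumption, not how any specific product implements memory: the `AgentMemory` class and its field names are invented, and production agents often use vector databases instead of plain lists.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # Short-term: only the most recent turns of the current conversation.
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    # Long-term: the full log of past interactions.
    long_term: list = field(default_factory=list)
    # Episodic: selected moments worth recalling later.
    episodic: list = field(default_factory=list)
    # Semantic: general facts that do not depend on any one conversation.
    semantic: dict = field(default_factory=dict)

    def record_turn(self, text: str, important: bool = False) -> None:
        self.short_term.append(text)  # oldest turn drops off once full
        self.long_term.append(text)
        if important:
            self.episodic.append(text)


memory = AgentMemory()
memory.semantic["company_timezone"] = "UTC"
memory.record_turn("Hi, I'm planning a product launch.")
memory.record_turn("The launch date is March 3.", important=True)
memory.record_turn("Can you draft an email?")
memory.record_turn("Make it shorter.")

print(list(memory.short_term))
print(memory.episodic)
```

After four turns, short-term memory holds only the last three, while long-term memory keeps all four and episodic memory keeps just the turn flagged as important.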

Agent Program

The agent program brings all these pieces together into one system. The “agent core” coordinates the core logic and behavioral traits. This program connects different components and decides how the agent should respond to various situations.

This four-part architecture works well for both simple and complex tasks. The agent program first triggers the LLM to understand a user’s request. Then it works with the right tools, checks its memory for context, and creates a response that considers everything.
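
Here is a rough sketch of how an agent program might tie these pieces together. It is illustrative only: `understand` is a keyword rule standing in for a real LLM call, and the `order_status` tool and the memory dictionary are hypothetical.

```python
def understand(request: str) -> str:
    """Stand-in for the LLM call: a keyword rule, not a real model."""
    return "order_status" if "order" in request.lower() else "small_talk"


# Hypothetical tool: a real agent would query an order system here.
TOOLS = {"order_status": lambda order_id: f"Order {order_id} has shipped."}


def agent_program(request: str, memory: dict) -> str:
    intent = understand(request)            # 1. the LLM interprets the request
    tool = TOOLS.get(intent)                # 2. pick the matching tool, if any
    order_id = memory.get("last_order_id")  # 3. pull context from memory
    if tool and order_id:
        reply = tool(order_id)
    elif tool:
        reply = "Which order do you mean?"
    else:
        reply = "Happy to help. What do you need?"
    memory["last_intent"] = intent          # remember this turn for next time
    return reply                            # 4. respond to the user


memory = {"last_order_id": "1001"}
print(agent_program("Where is my order?", memory))
```

Because the user never states an order number in the request, the reply only works thanks to the context pulled from memory.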

Reasoning Paradigms of AI Agents

In addition to architecture, AI agents need sound reasoning capabilities to make effective decisions. Two widely used reasoning paradigms for processing data and making decisions are ReACT and ReWOO.

ReACT

ReACT (Reasoning + Action) is a powerful method that blends step-by-step reasoning with action execution. This approach helps AI agents think systematically about their decisions before taking action. Our tests revealed that ReACT cuts hallucination rates to just 6% compared to 14% with traditional chain-of-thought methods.

ReACT’s special quality comes from its three-step cycle:

- Thought Phase: The agent reasons about the current situation

- Action Phase: It executes a specific tool or function

- Observation Phase: It analyzes the results before proceeding

This methodical approach helps agents maintain better context and make informed decisions.
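
The thought, action, observation cycle can be sketched as a simple loop. In this illustration, `scripted_model` is a hard-coded stand-in for the LLM, and the `lookup` tool and its data are hypothetical; the point is only to show how each observation feeds the next thought.

```python
FACTS = {"order 1001 status": "shipped"}  # hypothetical data source


def lookup(query: str) -> str:
    return FACTS.get(query, "no result")


def scripted_model(question: str, scratchpad: list) -> tuple:
    """Stand-in for an LLM: picks the next step from what has been seen so far."""
    if not scratchpad:
        return ("I need to check the order system.", "lookup", "order 1001 status")
    last_observation = scratchpad[-1][2]
    return ("I have what I need to answer.", "finish", last_observation)


def react(question: str, max_steps: int = 5):
    scratchpad = []  # running record of (thought, action, observation)
    for _ in range(max_steps):
        thought, action, arg = scripted_model(question, scratchpad)  # Thought
        if action == "finish":
            return arg, scratchpad
        observation = lookup(arg)                                    # Action
        scratchpad.append((thought, f"{action}[{arg}]", observation))  # Observation
    return None, scratchpad  # gave up after max_steps


answer, trace = react("Has order 1001 shipped?")
print(answer)
for step in trace:
    print(step)
```

The `max_steps` limit is a common safeguard so the loop cannot run forever if the model never decides to finish.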

ReWOO

ReWOO (Reasoning Without Observation) builds on ReACT’s properties but addresses some key limitations. This approach separates the reasoning process from external observations and optimizes efficiency.

The ReWOO framework works through three distinct modules:

- Planner: Creates complete blueprints to solve tasks

- Worker: Executes planned actions using appropriate tools

- Solver: Blends results to produce final solutions

ReWOO can also reduce computational cost. Because it plans actions upfront instead of interleaving reasoning with observations, it consumes fewer tokens and runs more efficiently.
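
A minimal sketch of the Planner, Worker, Solver split might look like the following. The plan is hard-coded where a real system would make one LLM planning call, and the tools, the `#E1`-style placeholders, and the data are assumptions made for this example.

```python
def planner(task: str) -> list:
    """Stand-in for one LLM call that writes the whole plan upfront."""
    return [
        ("#E1", "lookup", "price of widget"),
        ("#E2", "multiply", "#E1 * 3"),  # refers to evidence from the first step
    ]


def lookup(arg: str) -> str:
    return {"price of widget": "4"}[arg]  # hypothetical data


def multiply(arg: str) -> str:
    left, right = arg.split("*")
    return str(int(left) * int(right))


TOOLS = {"lookup": lookup, "multiply": multiply}


def worker(plan: list) -> dict:
    """Run each planned step, filling placeholders with earlier results."""
    evidence = {}
    for variable, tool_name, arg in plan:
        for known, value in evidence.items():
            arg = arg.replace(known, value)
        evidence[variable] = TOOLS[tool_name](arg)
    return evidence


def solver(task: str, plan: list, evidence: dict) -> str:
    """Combine the evidence into a final answer (here, the last step's result)."""
    return f"{task} Answer: {evidence[plan[-1][0]]}"


task = "What do 3 widgets cost?"
plan = planner(task)
evidence = worker(plan)
print(solver(task, plan, evidence))
```

Notice that the model is consulted once for the plan, not after every tool call, which is where the token savings come from.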

These reasoning methods represent vital advances in AI agents’ information processing and decision-making abilities.

How AI Agents Learn

AI agents have remarkable learning abilities. In fact, this is one of the key factors that differentiate AI agents from conventional software-based chatbots.

Here’s how AI agents continue learning:

Learning from examples

AI agents excel at learning from examples, much like humans do. We can train these agents with example datasets, and they will apply what they learned whenever they encounter similar queries. The agents can connect the examples to related information, even if they weren’t trained on that exact data.

Learning from experiences

Experiential learning really makes AI agents stand out. They can change their decision-making based on experience instead of just following preset rules. The agents keep an internal model of the world that updates with each interaction. This helps them:

- Process live data better

- Adapt as environments change

- Create smarter problem-solving strategies

Learning from environment feedback

AI agents can also improve their performance using feedback from their environment. With reinforcement learning, they receive rewards or penalties for their actions and adjust their behavior accordingly.
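
As a toy illustration of learning from rewards, the sketch below uses a simple bandit-style update, which is one of the most basic forms of reinforcement learning. The two actions, their fixed rewards, and the optimistic starting estimates are all assumptions chosen to keep the example small and deterministic.

```python
# Hypothetical environment: each action always earns a fixed reward.
REWARDS = {"short_reply": 0.2, "detailed_reply": 0.8}

# Start with optimistic estimates so every action gets tried at least once.
values = {action: 1.0 for action in REWARDS}
counts = {action: 0 for action in REWARDS}

for _ in range(20):
    action = max(values, key=values.get)  # pick the best-looking action
    reward = REWARDS[action]              # feedback from the environment
    counts[action] += 1
    # Move the estimate toward the observed reward (running average).
    values[action] += (reward - values[action]) / counts[action]

best = max(values, key=values.get)
print(best, counts)
```

After trying each action once, the agent settles on the one that earned the higher reward, which is the basic idea of adjusting behavior from feedback.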

Learning from human feedback

Human feedback gives AI agents their most valuable chance to learn. Reinforcement Learning from Human Feedback (RLHF) has led to amazing improvements in how agents perform. The agents learn through a reward model that represents priorities and guides their learning process.

The reward model needs surprisingly little comparison data to work. Research shows that making the reward model bigger works better than just adding more training data. This makes RLHF quick and practical for real-world applications.
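
To give a feel for how comparison data trains a reward model, here is a sketch of a commonly used pairwise preference loss. This article does not specify the exact loss, so treat the formula as an assumption: the loss is low when the response humans preferred gets a higher reward score than the one they rejected.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    margin = reward_chosen - reward_rejected
    return math.log(1 + math.exp(-margin))


# Reward model agrees with the human ranking: small loss.
agrees = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
# Reward model disagrees with the human ranking: large loss.
disagrees = preference_loss(reward_chosen=0.0, reward_rejected=2.0)

print(round(agrees, 3), round(disagrees, 3))
```

Training nudges the reward model to lower this loss across many human comparisons, and the resulting scores then guide the agent’s learning.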

These learning mechanisms work great together. You can combine example-based learning with human feedback to help agents understand things better and give more relevant responses. They can even say no to inappropriate questions and adapt based on user priorities.

While AI agents are highly capable, results still depend on choosing an agent with the right model, tools, and memory for your use case.

Final Thoughts: How do AI Agents Work?

AI agents work quite similarly to how humans function. They perceive data from their surroundings, think and make decisions, and then take action. All the while, they also keep learning from examples and surroundings.

Due to these capabilities, many businesses have been adopting AI agents to improve their workflow depending on the use cases. Chatsonic, for example, is used by many marketers for tasks like creating content and optimizing it for search engines.

The advanced AI marketing agent has integrated tools and multiple use cases, which makes it a necessary addition for every marketing team.

If you’re also looking for an AI agent to improve your marketing process, Chatsonic’s a platform to try out.

Ready to implement AI in your workflow? Sign up for Chatsonic today.
