AI agents can streamline your business operations with their autonomy and intelligence. But how do you ensure they’re reliable, ethical, and efficient?

In this guide, we’ll explore the top AI agent best practices and the ethical considerations for implementing agents effectively. We’ll discuss how to reduce hallucinations and bias, how to govern and protect data, and how to build in human oversight.

Curious about how to unlock the full potential of AI agents while safeguarding trust and accountability? Dive in and discover the key strategies for successful AI agent integration.

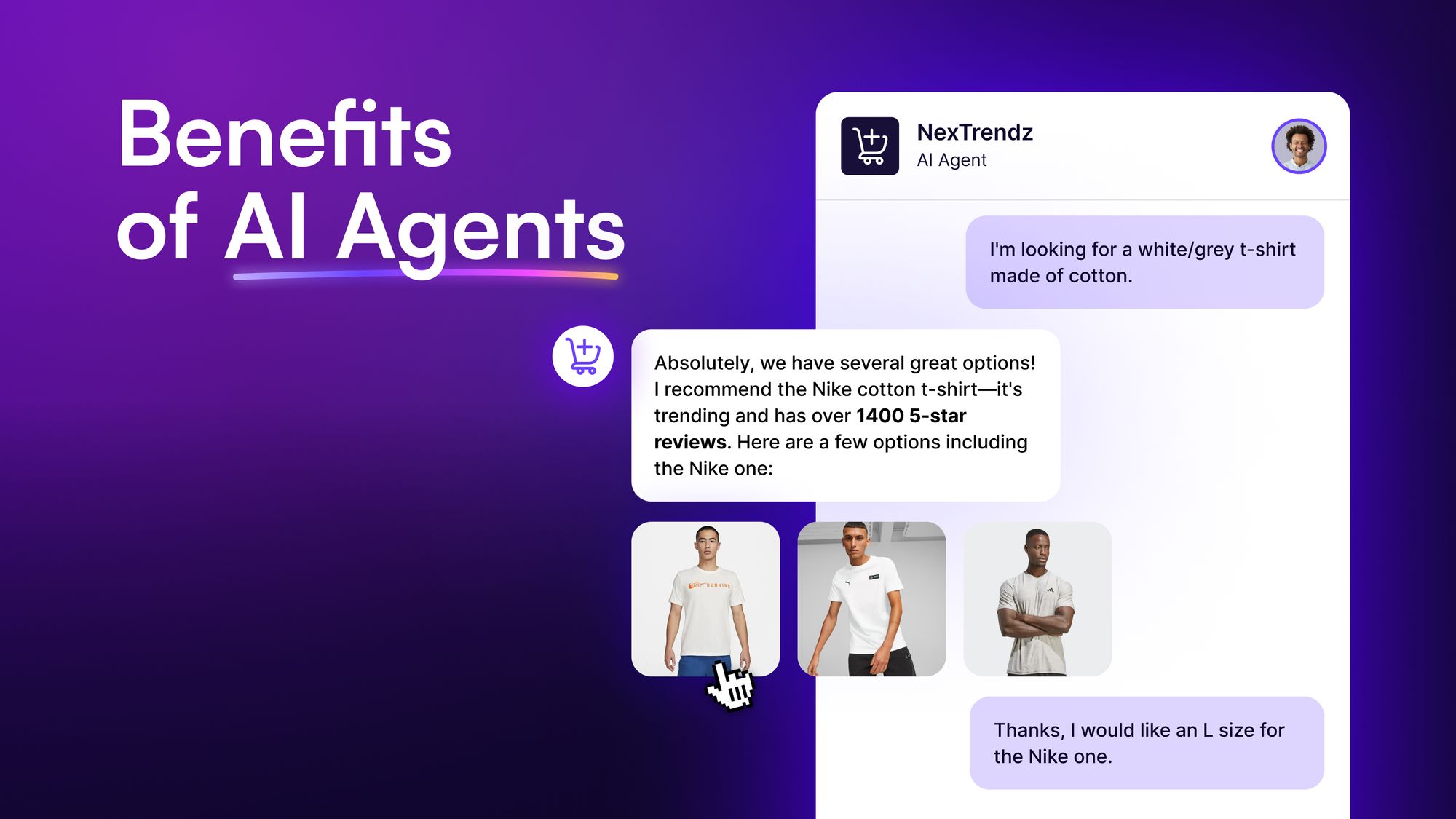

Read our detailed guide on what AI agents are to learn more about their types, capabilities, and the benefits of using them for your business.

AI Agent Best Practices

When implementing AI agents in your business processes, here are some best practices to consider:

Implement Retrieval-Augmented Generation (RAG) to Reduce Hallucinations

You may know the famous ChatGPT “strawberry” example, in which ChatGPT says the word contains two “r”s instead of three.

Although the answer is obvious to the human eye, the AI gets it wrong. This is an example of an AI hallucination, one of the most common issues when implementing AI agents.

When an AI hallucinates, it presents incorrect answers as if they were correct.

Because AI agents are autonomous and both make and execute decisions, these errors can erode trust, spread misinformation, and undermine decision-making. That’s why it’s crucial to reduce hallucinations in AI agents as much as possible.

How can you do that? By implementing retrieval-augmented generation (RAG).

What is RAG?

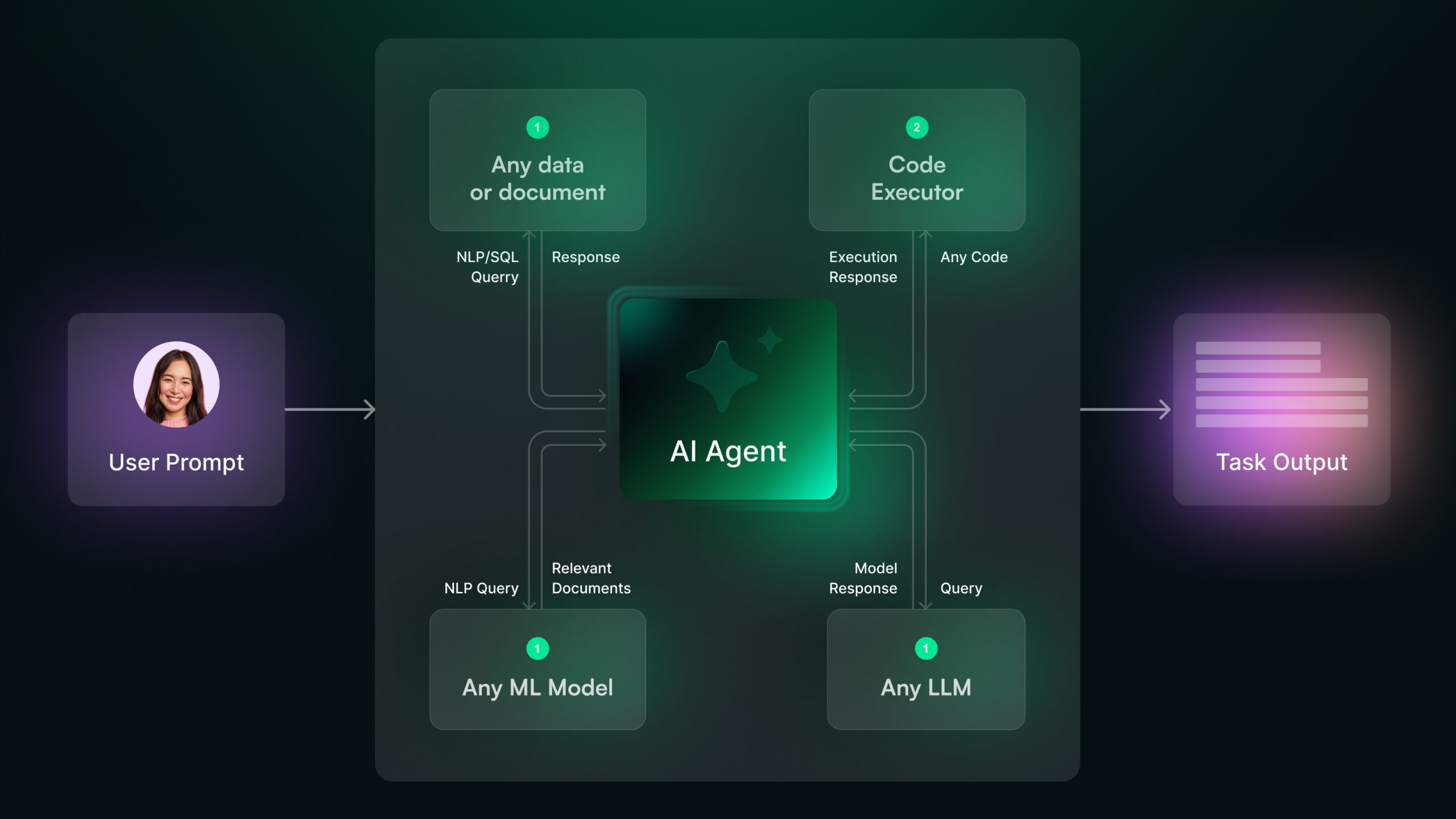

Retrieval-Augmented Generation (RAG) is a technique that enhances AI agents by connecting them to external databases or knowledge repositories. Before the model generates a response, RAG retrieves relevant, accurate passages from these sources and adds them to the prompt, grounding the output in current, verifiable data rather than in the model’s training data alone.
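To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It assumes a tiny in-memory knowledge base and uses simple word-overlap scoring as a stand-in for the vector search a production RAG system would use. The helpers `retrieve` and `build_grounded_prompt` are hypothetical, and the sketch stops at building the augmented prompt you would send to your agent’s LLM.

```python
import re
from typing import List, Set

# Hypothetical in-memory knowledge base; a real system would query a
# vector database or search index instead.
DOCUMENTS = [
    "The word strawberry contains three r's.",
    "Our refund window is 30 days from the date of purchase.",
    "Support is available Monday to Friday, 9am to 5pm.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents sharing the most words with the query.

    Word overlap is a simple stand-in for embedding similarity.
    """
    query_tokens = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_tokens & tokenize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, docs: List[str]) -> str:
    """Insert the retrieved passages into the prompt so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


print(build_grounded_prompt("How many days is the refund window?", DOCUMENTS))
```

The instruction to answer only from the context, and to admit when the context is silent, is what steers the model away from inventing an answer.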

Using RAG can considerably reduce hallucinations in AI agents, and many businesses are already implementing the technique to get more accurate, reliable results.

In fact, Acurai, an AI service, has recently claimed 100% elimination of hallucinations using RAG.

If you’re using AI agents, it’s best to choose one with RAG built in. For example, Chatsonic, an AI marketing agent, performs RAG over both the web and user-uploaded documents to produce reliable responses.

This saves you the hassle of implementing RAG separately and helps keep your AI processes accurate throughout.

Start with Focused Use Cases for Maximum Impact

Before you adopt AI agents, zero in on the processes you want to use AI for. This lets you choose purpose-specific AI agents instead of general ones.

AI agents designed for specific tasks often outperform their general-purpose counterparts. That’s because focused AI agents are trained and optimized for particular scenarios, which lets them provide targeted solutions. General-purpose AI agents, by contrast, require broader training and may struggle to meet niche demands effectively.

Take Chatsonic, for example. It’s designed specifically for marketing tasks and excels in creating personalized content, crafting social media strategies, and generating ad copy.

If you try similar tasks with a general-purpose bot such as ChatGPT, you’ll still get results. But they’re likely to be more generic and of lower quality, which may defeat the purpose of implementing an AI agent.

By starting with a clear, focused use case, you can maximize the impact of your AI investments while minimizing the complexity of implementation.

Conduct Continuous Evaluation for Reliability

Deploying an AI agent isn’t a set-it-and-forget-it task. Continuous evaluation is important to ensure reliability, relevance, and accuracy, particularly in dynamic environments.

That’s because AI agents interact with datasets and user needs that continuously change. Without regular assessments, they risk becoming outdated, inconsistent, or prone to errors.

However, continuously evaluating AI agents comes with its own challenges:

- The sheer volume of data and interactions makes manual evaluation impractical.

- Maintaining consistent standards across evaluations can be difficult.

Many companies address this by combining human-based and LLM-based evaluation methods. To handle large query volumes in human-based evaluation, you can use crowdsourcing or end-user feedback.

However, these processes still require considerable time and resources. For faster evaluation at scale, try newer methods like “LLM-as-a-judge,” in which an LLM continuously scores the AI agent’s outputs against defined criteria, offering a far more scalable alternative to manual evaluation.
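Here is a minimal sketch of an LLM-as-a-judge loop in Python. The `judge_fn` parameter is an assumption: any function that sends a prompt to your judge model and returns its text reply. The rubric, the `SCORE:` reply format, and the threshold of 3 are illustrative choices rather than a standard, and `fake_judge` is a hypothetical stand-in so the example runs without an API.

```python
import re
from typing import Callable, List, Optional, Tuple

JUDGE_TEMPLATE = (
    "You are evaluating an AI agent's answer.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Rate the answer's factual accuracy and relevance from 1 (poor) to 5 (excellent).\n"
    "Reply in the form: SCORE: <number>"
)


def parse_score(reply: str) -> Optional[int]:
    """Extract the 1-5 score from the judge's reply, or None if it is malformed."""
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def evaluate(
    samples: List[Tuple[str, str]],
    judge_fn: Callable[[str], str],
    threshold: int = 3,
) -> Tuple[List[Optional[int]], List[Tuple[str, str]]]:
    """Score (question, answer) pairs with an LLM judge.

    Returns the scores plus the pairs that scored below the threshold or got
    an unparseable verdict, so humans can review them.
    """
    scores, flagged = [], []
    for question, answer in samples:
        reply = judge_fn(JUDGE_TEMPLATE.format(question=question, answer=answer))
        score = parse_score(reply)
        scores.append(score)
        if score is None or score < threshold:
            flagged.append((question, answer))
    return scores, flagged


def fake_judge(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call so the sketch runs offline."""
    return "SCORE: 5" if "three r" in prompt else "SCORE: 2"


samples = [
    ("How many r's are in strawberry?", "There are three r's."),
    ("How many r's are in strawberry?", "There are two r's."),
]
scores, flagged = evaluate(samples, fake_judge)
print(scores)   # [5, 2]
print(flagged)  # [("How many r's are in strawberry?", "There are two r's.")]
```

Routing low or unparseable scores to a human keeps the judge scalable without letting its own mistakes pass silently.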

Integrate Human Oversight to Maintain Control

We’ve already discussed AI hallucinations, but they’re only one of many ways an AI can produce wrong outputs. Sometimes, AI agents might even experience downtime or make decisions that don’t match what you expect.

They can also show biases or produce potentially harmful information, all of which should be monitored.

This is where human oversight comes in. It ensures that AI systems operate ethically, responsibly, and within acceptable boundaries. By maintaining control over AI processes, organizations can avoid errors, reduce risks, and foster trust in AI deployments.

Yet one of the main goals of deploying AI agents is to reduce human input. So how can you maintain human oversight while still reducing manual intervention?

The key is striking a balance between automation and human involvement. AI should handle repetitive, low-stakes tasks, freeing humans to focus on monitoring and governance. You can also implement two frameworks to ensure proper human supervision:

- Human-in-the-loop (HITL): These systems involve humans at all critical decision points.

- Human-on-the-loop (HOTL): These systems require humans to supervise and intervene only when necessary.

Take content moderation, for example. AI agents can flag potentially harmful posts, but human moderators make the final decision to avoid over-censorship or missed cultural nuances. Similarly, in healthcare, AI can assist in diagnosing conditions, but doctors make the final call based on patient context and clinical experience.
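As a rough illustration of how the two frameworks differ in a moderation pipeline, here is a minimal Python sketch. The `Decision` fields, the mode names, and the 0.9 confidence threshold are hypothetical; a real system would tune its escalation rules to its own risk tolerance.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str        # e.g. "remove_post" or "approve_post"
    confidence: float  # model's confidence in the action, 0.0 to 1.0
    high_stakes: bool  # whether the action is critical or hard to reverse


def route(decision: Decision, mode: str, threshold: float = 0.9) -> str:
    """Decide whether an AI agent's action runs automatically or goes to a human.

    - "hitl" (human-in-the-loop): every critical decision waits for human approval.
    - "hotl" (human-on-the-loop): actions run automatically while humans supervise;
      a human is pulled in only when the agent's confidence is low.
    """
    if mode == "hitl":
        return "human_review" if decision.high_stakes else "auto_execute"
    if mode == "hotl":
        return "human_review" if decision.confidence < threshold else "auto_execute"
    raise ValueError(f"unknown oversight mode: {mode!r}")


flagged_post = Decision("remove_post", confidence=0.95, high_stakes=True)
print(route(flagged_post, "hitl"))  # human_review
print(route(flagged_post, "hotl"))  # auto_execute
```

The same confident removal goes to a moderator under HITL but runs automatically under HOTL, which is exactly the trade-off between control and reduced manual work.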

By integrating human oversight into AI agent workflows, you can ensure better reliability, ethical compliance, and trust in your systems. This hybrid model helps you deliver better outcomes while maintaining accountability and control.

Optimize Resource Usage for Cost-Effectiveness

AI agents, given their advanced nature, also consume more energy and resources. For example, training OpenAI’s GPT-3 is estimated to have used around 1,300 megawatt-hours (MWh) of electricity, equivalent to the yearly consumption of about 130 US homes in 2022.
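The household comparison implies about 10 MWh of electricity per home per year, which is easy to sanity-check:

```latex
\frac{1{,}300\ \text{MWh}}{130\ \text{homes}} = 10\ \text{MWh per home} = 10{,}000\ \text{kWh per home per year}
```

That figure is in the same range as typical annual electricity use for a US household, so the comparison holds up.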

What does this mean for a business? If you’re deploying AI agents, you need to track energy and resource consumption both as a cost and as an ethical consideration.

In fact, AI resource optimization is becoming a priority well beyond individual companies. For instance, the Canadian Artificial Intelligence Safety Institute (CAISI), launched in November 2024, has been at the forefront of promoting sustainable AI development, and one of its core objectives is addressing the resource challenges associated with AI technologies.

Fortunately, there are multiple ways to optimize the resource usage of AI agents.

One way is to adopt lean AI methodologies. This approach involves designing lightweight AI models that deliver high performance while minimizing computational demands. By stripping away unnecessary complexity, lean AI focuses on achieving results efficiently.

Another method is to fine-tune pre-trained AI models. Instead of training an entirely new model, you adapt an existing pre-trained model, or use an AI agent already built for your application, which reduces the resources needed for training.

Choose Appropriate Pricing Models

Implementing AI agents can be a major investment once you account for implementation, usage, and maintenance costs. However, choosing the right pricing model can make your AI strategy cost-effective and practical.

For example, Devin AI, an AI software engineering agent, claims cost savings of up to 20x. However, its team plan costs $500 a month, which may make sense only for larger teams. For smaller teams and individuals, that fee may not translate into 20x cost savings.

Broadly, you can choose between two pricing models: subscription-based and the newer usage-based model.

Subscription-based plans offer predictable monthly costs, ideal for businesses with steady workloads. In contrast, usage-based models are highly flexible, allowing companies to pay only for the resources they consume. This is particularly beneficial for organizations with fluctuating or unpredictable demands.
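A quick way to compare the two models is to find the break-even usage level. The sketch below assumes a flat monthly fee versus a fixed price per unit of work; the $500 fee echoes the Devin example above, while the $2.00 per-task rate is purely hypothetical.

```python
def monthly_cost_usage(units: int, price_per_unit: float) -> float:
    """Usage-based plan: pay only for what you consume."""
    return units * price_per_unit


def break_even_units(subscription_fee: float, price_per_unit: float) -> float:
    """Monthly usage at which both plans cost the same.

    Below this volume, usage-based pricing is cheaper; above it, the flat
    subscription is cheaper.
    """
    return subscription_fee / price_per_unit


def cheaper_plan(units: int, subscription_fee: float, price_per_unit: float) -> str:
    """Name the cheaper plan for a given monthly volume (ties go to subscription)."""
    usage_cost = monthly_cost_usage(units, price_per_unit)
    return "usage-based" if usage_cost < subscription_fee else "subscription"


# Hypothetical numbers: a $500/month subscription vs. $2.00 per completed task.
print(break_even_units(500, 2.0))   # 250.0
print(cheaper_plan(100, 500, 2.0))  # usage-based
print(cheaper_plan(400, 500, 2.0))  # subscription
```

If your monthly volume swings around the break-even point, the flexibility of usage-based pricing is usually worth more than the predictability of a subscription.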

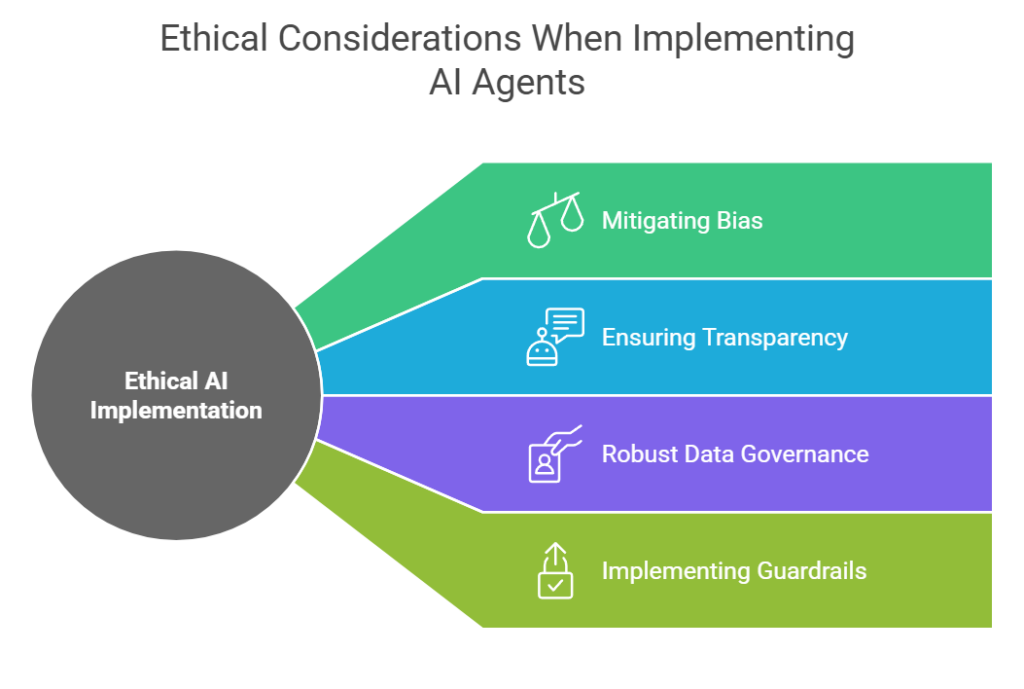

AI Agent Best Practices: The Ethical Considerations

Apart from best practices, you should also remember these ethical considerations when implementing AI agents:

Mitigate Bias Through Rigorous Testing

Imagine working in an environment where discrimination or unfair treatment undermines productivity and morale. Now, consider how similar issues in AI systems can impact users and businesses.

Like human employees, AI agents must help maintain a fair and healthy environment, free from discrimination, hate speech, or extreme ideologies. And even though AI agents don’t have “emotions,” they are still prone to bias.

Why does bias in AI agents occur?

AI models are trained on vast datasets that often reflect societal biases. These biases can be encoded into AI systems, leading to outputs that reinforce stereotypes or treat people unfairly.

For instance, an AI recruitment tool trained on historical hiring data might favor certain demographics over others, simply because of patterns in the training data.

To mitigate these biases, several companies are building tools that scan AI outputs for bias or help train AI agents more fairly. For instance, Latimer, an AI company, recently launched a Chrome browser extension that detects bias in AI-generated text.

If you want to use AI agents in your business, mitigating these biases is crucial, both as an AI agent best practice and as an ethical consideration. Here are some strategies you can adopt:

- Diverse and representative training data: Ensure the datasets used for training AI agents include diverse demographics, cultures, and scenarios. For example, an AI customer service agent trained on a global dataset is less likely to favor one region’s language or dialect over another.

- Regular auditing: Conduct ongoing evaluations to identify and correct biased outputs. Tools such as Latimer AI’s Chrome extension can help maintain fairness in AI interactions.

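- Algorithmic fairness techniques: Employ methods like adversarial debiasing, which trains the model alongside an adversary that tries to predict a protected attribute (such as gender) from the model’s outputs and penalizes the model whenever the adversary succeeds. Another option is fair representation learning, which learns data representations that keep useful information while obscuring protected attributes.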

- Transparency and explainability: Develop AI systems that can clearly explain their decision-making processes. This transparency helps users identify potential biases and ensures accountability.
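For the regular-auditing step, one common check on an AI recruitment tool is to compare selection rates across groups. The minimal Python sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest; flagging ratios under 0.8 follows the “four-fifths rule” used in US hiring guidance. The group labels and audit log are made up for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Compute the share of candidates the tool advanced, per group.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest.

    If no group was selected at all, the rates are equal, so return 1.0.
    """
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical audit log: group A advanced 6 of 10, group B advanced 3 of 10.
log = [("A", i < 6) for i in range(10)] + [("B", i < 3) for i in range(10)]
rates = selection_rates(log)
ratio = disparate_impact_ratio(rates)
print(rates)               # {'A': 0.6, 'B': 0.3}
print(ratio, ratio < 0.8)  # 0.5 True -> flag the tool for review
```

A failing ratio doesn’t prove discrimination on its own, but it tells you where to dig into the training data and decision criteria.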

Ensure Transparency and Explainability

If you’re using AI, make it clear.

Transparency is crucial in building trust and maintaining accountability when deploying AI systems. Customers, employees, and stakeholders all need to be informed about where and how AI is being utilized within your organization.

In one recent study, for instance, 72% of customers said they want to know when they’re talking to an AI agent. Transparency is also required under regulations such as the GDPR and the European Union AI Act.

Use Robust Data Governance

Ownership of AI data, and how that data may be used, is debated from both ethical and legal standpoints. Still, the need for robust AI data governance, especially when using autonomous AI agents, is undeniable.

In 2022, author Kristina Kashtanova registered the copyright for Zarya of the Dawn, a graphic novel whose images she produced with Midjourney, a generative AI tool. The US Copyright Office initially granted the registration but later reconsidered it, and in 2023 it limited protection to the elements Kashtanova created herself, such as the text and the selection and arrangement of images, while excluding the AI-generated images.

Unfortunately, this isn’t a one-off case. As the use of AI agents grows, cases like this raise a critical question: who owns AI-generated data and creations? It’s a gray area in law and ethics that urgently needs clear regulation.

If you’re also implementing AI agents in your system, here are some steps you can take to ensure proper data governance:

- Audit Data Sources: Regularly review and document the origins of datasets used to train AI systems, ensuring they meet ethical and legal standards.

- Implement Data Protection Measures: Use encryption, access controls, and anonymization to safeguard sensitive data against breaches.

- Foster Transparency: Clearly communicate to users how their data is being utilized, whether for training AI or generating insights.

- Engage in Policy Advocacy: Collaborate with industry peers and policymakers to shape fair and practical AI governance frameworks.

- Stay Updated on Regulations: Monitor developments like the EU AI Act to ensure compliance and maintain ethical AI practices.
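To illustrate the data protection step, here is a minimal Python sketch that pseudonymizes email addresses with a keyed hash (HMAC-SHA256) before text is passed to an AI agent. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-link tokens to known emails. The hard-coded key, the email regex, and the `user_` token format are illustrative assumptions.

```python
import hashlib
import hmac
import re

# Assumption: in production this key would come from a secrets manager,
# never from source code.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize(value: str) -> str:
    """Replace an identifier with a stable keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so records can still be
    joined, but the raw email is never exposed and tokens can't be
    recomputed from guessed emails without the key.
    """
    digest = hmac.new(SECRET_KEY, value.lower().encode("utf-8"), hashlib.sha256)
    return "user_" + digest.hexdigest()[:12]


def redact_emails(text: str) -> str:
    """Swap every email address in free text for its pseudonym."""
    return EMAIL_PATTERN.sub(lambda m: pseudonymize(m.group(0)), text)


print(redact_emails("Customer jane.doe@example.com asked about her refund."))
```

Because the tokens are stable, the agent can still tell that two tickets came from the same customer without ever seeing who that customer is.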

Implement Effective Guardrails

Guardrails are rules and checks that keep your AI agent systems operating within defined limits, covering everything from company policies and best practices to legal regulations and ethical guidelines.

Think of them as boundaries your AI agents shouldn’t cross.

Why Are Guardrails Necessary?

AI agents are increasingly autonomous, capable of making decisions and executing actions without human intervention. However, this autonomy comes with risks, such as producing harmful outputs, breaching ethical standards, or acting beyond their intended scope. Guardrails act as checkpoints to mitigate these risks, protecting both users and organizations.

With guardrails, you can make the most out of AI agents while minimizing the risk of unwanted or harmful outputs. If you want to set up effective guardrails as an AI agent best practice, here are some guidelines to follow:

- Define clear operational limits: Set boundaries to ensure the AI does not exceed its intended role or misuse its capabilities.

Example: A customer support bot should only provide responses within its trained domain and escalate complex issues to a human agent.

- Establish ethical guidelines and frameworks: Create a set of ethical principles that govern AI actions, such as fairness, transparency, and non-discrimination.

Example: AI hiring tools should be programmed to avoid bias by adhering to predefined fairness criteria.

- Implement content filtering: Use pre-trained models and keyword filtering to block unacceptable outputs.

Example: A language model integrated into a social platform should filter hate speech, misinformation, and explicit content.

- Create fail-safe mechanisms: Incorporate fallback options, such as redirecting decisions to human supervisors during uncertain or high-stakes situations.

Example: An AI in a medical diagnosis tool should defer to a human doctor when encountering ambiguous cases.
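Several of these guardrails can be layered in code around an agent’s output. The minimal Python sketch below, for a hypothetical customer support agent, checks a drafted reply against an allowed-topic list, a simple keyword filter, and a confidence floor. The topics, blocked terms, threshold, and the assumption that the agent reports a topic and confidence score are all illustrative.

```python
from dataclasses import dataclass

# Hypothetical configuration for a customer support agent.
ALLOWED_TOPICS = {"billing", "shipping", "returns"}
BLOCKED_TERMS = {"password", "social security number"}
CONFIDENCE_FLOOR = 0.7


@dataclass
class AgentReply:
    topic: str         # topic the agent believes it is answering
    text: str          # drafted reply
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0


def apply_guardrails(reply: AgentReply) -> str:
    """Check a drafted reply against guardrails before it reaches the user."""
    # Operational limit: stay within the trained domain.
    if reply.topic not in ALLOWED_TOPICS:
        return "ESCALATE: out of scope, routing to a human agent"
    # Content filter: block replies containing restricted terms.
    lowered = reply.text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "BLOCKED: reply contained restricted content"
    # Fail-safe: defer to a human when the agent is unsure.
    if reply.confidence < CONFIDENCE_FLOOR:
        return "ESCALATE: low confidence, routing to a human agent"
    return reply.text


print(apply_guardrails(AgentReply("returns", "You can return items within 30 days.", 0.92)))
```

Keyword matching is only a first line of defense; production content filters typically add trained moderation models on top.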

Final Thoughts: AI Agents Best Practices and Ethical Considerations

As you bring more AI agents into your business processes, following AI agent best practices and ethical considerations becomes imperative.

While setting up guidelines and guardrails is crucial, it’s also essential to use reliable, trusted AI agents that protect your data and keep your processes hassle-free.

Chatsonic is one such reliable AI marketing agent that’s designed to simplify your marketing tasks. From content research and creation to optimization and publishing, Chatsonic can help you with all things marketing.

Ready to streamline your processes with AI agents? Try Chatsonic today.
